The process of installing a polling widget on your institution’s homepage is fairly straightforward. I tend to prefer self-hosted solutions, and open-source ones at that. Thankfully, in my job we have that luxury. If you’re attempting this with no knowledge of PHP or servers, you might run into some issues. I’ll try to explain as best I can, but leave a comment if you get lost in the process, and I’ll be happy to clarify what I can.

The first step is to find a polling software solution; basically any polling software that produces an HTML/PHP page can be embedded. Ideally the page should be served over HTTPS (a secure HTTP connection), so if you’re self-hosting the polling solution as we are, you should put it behind that extra security. Why? Well, Internet Explorer doesn’t gracefully handle pages that mix secure and insecure content, and it gives the end user a pop-up with some unclear language that, in the end, only adds more hurdles for the user trying to answer the poll. In fact, Firefox now has similar behaviour (with an even less obvious notification that the user has to act on before the content will load).

We’re using this polling software: http://codefuture.co.uk/projects/cf_polling/ which serves our purposes quite nicely. It doesn’t allow for question types other than multiple choice, so if you need that functionality, you’ll have to choose something else. For our polls, we’ve worked the questions so that they fit this mold. An extra bonus of this one is that it stores all the data in a flat file rather than in a database, so you only have one thing to maintain.

Within the PHP code, you can edit the options – the PHP file is well commented and shouldn’t give you any issues. One quirk I’ve run into is that the D2L widget editor doesn’t refresh the data well, so if you make an error in the PHP, you should create a new file to upload rather than trying to overwrite the existing one. I couldn’t figure out why it wasn’t letting me reset the data collected. (I suspect that the flat file is generated using the name of the PHP file, so when you update the PHP, it won’t force a reset of the data captured. Of course, why it wouldn’t overwrite the typo in the one answer, I’m not sure.)

Another downside, and it’s a big one if you want to use these numbers as more than a general indicator, is that this solution does not track users. If you do choose this route, be aware that the poll sets a cookie on the computer that answers the poll, which isn’t necessarily tied to the user who answered it, so the same person could answer the poll multiple times. We don’t particularly care about that, because we’re only using it to get a general sense of how the community feels on these issues. With a large enough data set, even with some mischievous numbers, we’d be OK.
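To make the cookie limitation concrete, here’s a minimal sketch of how a cookie-based “already voted” check generally works. This is not cf_polling’s actual code: the function names and the cookie name are made up for illustration, and the real library may do things differently.

```php
<?php
// Hypothetical cookie name builder; cf_polling's real cookie name may differ.
function vote_cookie_name(string $pollId): string
{
    return 'poll_voted_' . md5($pollId);
}

// A browser counts as "has voted" only if it sends the cookie back.
// Nothing here identifies the person, only the browser.
function has_voted(array $cookies, string $pollId): bool
{
    return isset($cookies[vote_cookie_name($pollId)]);
}

// Same person, two browsers: the second browser has no cookie yet,
// so the poll would happily accept another vote from it.
$browserA = [vote_cookie_name('cfpoll2') => '1'];
$browserB = [];

var_dump(has_voted($browserA, 'cfpoll2')); // bool(true)
var_dump(has_voted($browserB, 'cfpoll2')); // bool(false)
```

In a real page you’d pass `$_COOKIE` instead of the hand-built arrays. Clearing cookies, switching browsers or moving to another lab computer all put the voter back in the “hasn’t voted” state.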

You’ll need some basic CSS skills as well to edit how the page will look. There are three options by default, but I’ve trimmed the script so it doesn’t include the extra options we aren’t using. I’ve rewritten the CSS to more accurately reflect the branding and colour scheme that we use at my institution.

I’ve included the text of the script below as an example of what we run and how we customize it. If you can’t see it, visit the text on pastebin.

<?php

///////////////////////////////////////////////

// include the cf polling class file
include('cfPolling/cf.poll.class.php');

// your poll question
$poll_question = 'How well did the Discussion tool stimulate a conversation that improved understanding of the course material?';

// In this variable you can enter the answers (voting options),
// which are selectable by the visitors.
// Each voting option is appended as its own array entry. Example
$answers[] = 'did not use';
$answers[] = 'a little bit';
$answers[] = 'a lot';
$answers[] = 'was crucial';

// Make new poll
$new_poll = new cf_poll($poll_question, $answers);

// (Option)
// if you do not want to use cookies to log if a user has voted.
// if you are not using one_vote there is no need to use this.
// $new_poll->setCookieOff(); // (new 0.93)

// (Option)
// One vote per ip address (and cookies if not off)
$new_poll->one_vote();

// (Option)
// Number of days to run the poll for
$new_poll->poll_for(28); // end in 28 days
// $new_poll->endPollOn(02,03,2010); // (D,M,Y) the date to end the poll on (new 0.92)

// (Option)
// Set the Poll container id (used for css)
$new_poll->css_id('cfpoll2');

// check to see if a vote has been cast
// used if the user has javascript off
$new_poll->new_vote($_POST);

// echo/print poll to page
echo $new_poll->poll_html($_GET);

?>

So that’s the backend of things. We currently set up each polling question manually, and will rotate through six different questions (which means six unique PHP scripts) in a semester. Every three weeks, we prepare a new script page by copying the previous one, editing the end date, question and answers, and uploading it to the server.

Now getting it into a widget in a course (or at the organization level) is dead simple. Create a new widget, edit that widget and get to the HTML code view for the content of that new widget. Once there, put in this code:

<p style="text-align: center;"><iframe src="LOCATION OF YOUR FILE HERE" height="340" width="280" scrolling="no"></iframe></p>

Of course, you’ll replace “LOCATION OF YOUR FILE HERE” with the location of the PHP script you’re using. Click Save to save the widget. You won’t be able to preview this widget, so you’ll have to have a bit of faith that your code (and my code) is correct. Add the widget to your homepage, and you’re home for dinner.
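Since the widget can’t be previewed, it can help to generate the snippet rather than hand-edit it. Here’s a rough sketch of a helper that builds the same iframe markup and refuses any address that isn’t HTTPS (the mixed-content issue mentioned earlier). The function name `poll_widget_html` and the example.edu address are my own placeholders, not anything provided by D2L or cf_polling.

```php
<?php
// Hypothetical helper (not part of D2L or cf_polling): builds the widget
// markup for a poll URL and rejects anything not served over HTTPS.
function poll_widget_html(string $url, int $width = 280, int $height = 340): string
{
    $scheme = parse_url($url, PHP_URL_SCHEME);
    if (!is_string($scheme) || strtolower($scheme) !== 'https') {
        throw new InvalidArgumentException('Poll URL must start with https://');
    }

    // Escape the URL so characters like & or " can't break the attribute.
    $src = htmlspecialchars($url, ENT_QUOTES, 'UTF-8');

    return sprintf(
        '<p style="text-align: center;"><iframe src="%s" height="%d" width="%d" scrolling="no"></iframe></p>',
        $src,
        $height,
        $width
    );
}

// example.edu is a placeholder address, not a real poll location.
echo poll_widget_html('https://example.edu/polls/poll1.php'), "\n";
```

Run it from the command line, then paste the printed line into the widget’s HTML code view.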

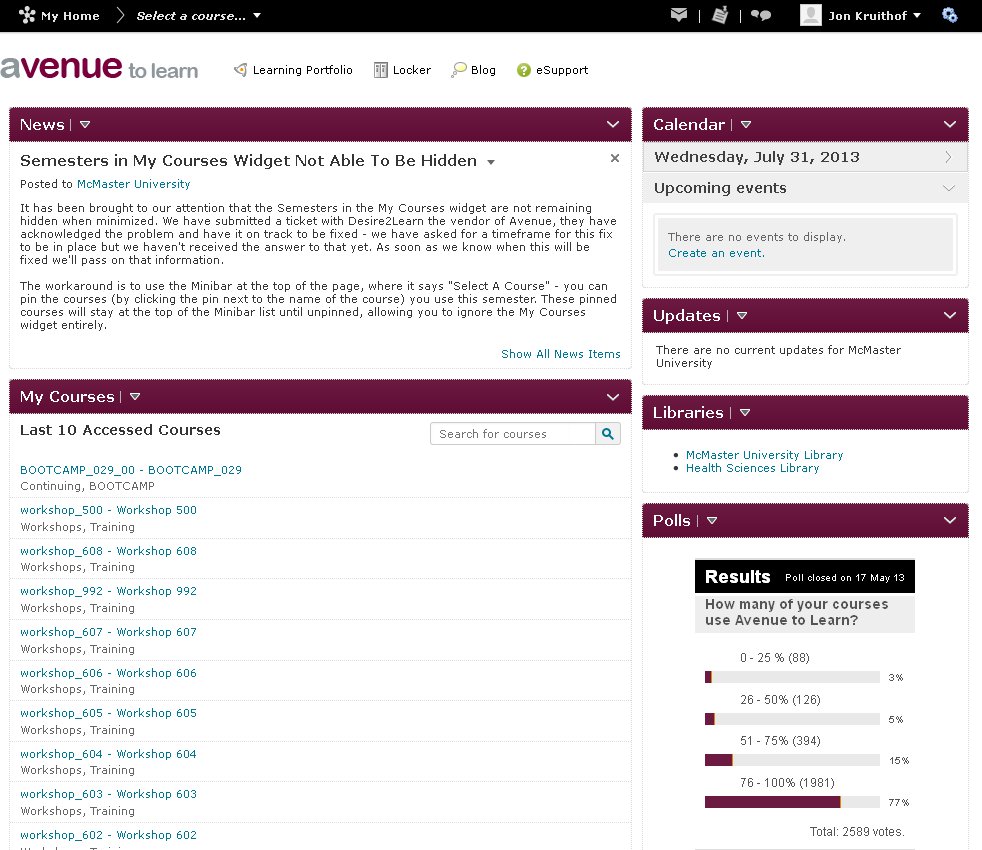

Our experience with this has been pretty surprising. The first time we ran the polls there were 36 responses in 10 minutes (during exams), 1450 in 24 hours and 2655 after one week. After three weeks the final tally was 3598. Now remember, that’s votes, not individuals. Even if each student voted an average of 1.4 times, which might skew the numbers somewhat, that’s still pretty representative (and it corresponds with our internal numbers for the tool we surveyed about).
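To put the votes-versus-individuals point in numbers, here’s a back-of-the-envelope sketch. It simply divides the final tally by the assumed 1.4 votes per student from the paragraph above; the function name is made up, and the result is only as good as that 1.4 assumption.

```php
<?php
// Rough estimate of individual voters from a vote tally, assuming an
// average number of votes per person (the 1.4 figure is an assumption).
function estimate_voters(int $totalVotes, float $votesPerPerson): int
{
    if ($votesPerPerson <= 0) {
        throw new InvalidArgumentException('votesPerPerson must be positive');
    }
    return (int) round($totalVotes / $votesPerPerson);
}

echo estimate_voters(3598, 1.4), "\n"; // 2570
```

So under that assumption, the 3598 votes would correspond to roughly 2570 individual students.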

Here’s what the Poll looks like:

What do we hope to find with this? Well, personally I wanted to see how the usage numbers from the Analytics tool would compare with what users self-report. Does use of the tool make for an impression of using the tool? Are students even aware of the different tools in D2L?

UPDATE: It looks like the polling tool that I used is no longer around. I looked for a mirror but couldn’t find one in my extensive search. There are alternatives, which I found through this blog post on polling with PHP and without databases, which pointed to this site: http://www.dbscripts.net/poll/ – this may not work for you because it requires server access to the .htaccess file. I’ll continue to update this post if other alternatives present themselves.