Even though it’s the weekend, it’s August 8. This is my work anniversary. So I’ll be taking a moment to write about work while I enjoy the evening.

After a long discussion with my direct boss, we decided that I needed to stop doing everything that I do and focus on doing a few things. I can say that it’s a good idea; I’m a bit of a control freak. If you’ve worked with anyone like me, you’ve probably been witness to someone who has opinions, shares them at the drop of a hat, and will doggedly defend those beliefs and continue to circle back to fight for them again and again. Where this becomes a problem is when I feel the insane need to do everything. Redesign training? Check. Read the 100+ page document about the LMS upgrade? Check. Dissect it and rewrite it for our campus? Check. Update websites? Check.

I honestly do want other people to feel they have room to do work without me jumping into what they do. I like to think I’m a good person, and I fully recognize my flaws (and this is a big one). I’d also like to think that I’ve tried to make room for others to do stuff. Maybe I haven’t been as effective in doing that; I don’t know, as I can’t speak to how others feel. So I’ve vowed to step back from the LMS administration side of things to focus on the ePortfolio and badging projects that we have going.

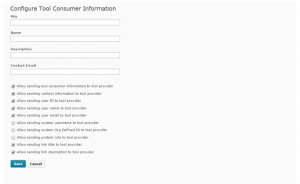

Now, if you pay attention to LMSs, you’ll know that D2L announced that their badging service will be available for clients on continuous delivery (Brightspace version 10.4 and higher) starting September 2015. We’re also expanding the number of university-wide ePortfolio software solutions from just the D2L ePortfolio tool, adding another to complement where D2L eP is weak. There’s not been an official announcement; however, we’ve started internal training, and I should be writing about the process of getting this other software up and running, as I think it’ll be quite an accomplishment in the time frames we have set.

Now you may wonder where the D2L ePortfolio tool is weak.

Well, first, let me give you two caveats. One, I’ve given this information to our account manager and I know for a fact it’s gone up the chain to D2L CEO John Baker. I think we’ll see improvements in the tool over the next while. I’m cautiously optimistic that by this time next year D2L’s ePortfolio tool will be improved. With that in mind, I keep an interested eye on the Product Idea Exchange inside the Brightspace Community for developments. The other caveat is that we’re using ePortfolios in such a way that we want to leverage social opportunities for reflective practice. Frankly, this wasn’t something that we knew when we started with ePortfolios, and hence wasn’t part of our initial needs.

OK, the weaknesses (from my perspective).

1. The visual appeal of the tool is challenged. The web portfolios that are created are OK looking, but the tool needs a visual design overhaul. Many aspects of the tool still bear the pre-version 10 look of D2L’s products. It also needs to be intuitive for students to use, and part of that falls on the visual arrangement of tools. There are three sections of the ePortfolio dashboard where you can do “stuff” (the menus, the quick reflection box, and the right-hand side for filling out forms and other ephemera). I totally understand why you need complexity for a complex tool – especially one designed to be multi-purpose.

2. Learning goals, which are a huge part of reflective practice, are not built into the portfolio process. You can, yes, create an artifact that could represent your learning goal, and associate other artifacts as evidence of achieving that goal – but I’d ask you to go through that process as a user to see why it’s problematic. Many, many clicks.

3. There is a distinct silo effect between the academic side and the personal side of things. If we extrapolate our learning goals to be equivalent to learning outcomes (and I feel they should be) – those learning goals are still artifacts, and outcomes/competencies pulled from the courses are labelled something else. Again, I don’t think the design of the ePortfolio tool is aimed at this idea. However, if we’re serious about student-centred learning, shouldn’t we be serious about what the student wants to get out of this experience, and treat what they want out of the experience, whatever that is, at the same level as what teachers, or accreditation bodies, or departments, or schools feel they should know?

4. Too many clicks to do things. Six clicks to upload a file as an artifact is too many.

5. Group portfolios are possible, but so challenging to do that we’ve instituted a best practice: you organize it socially and make one person responsible for collecting artifacts and submitting the portfolio presentation. Even if you want to take on the challenge, when you share a presentation with another person and give them edit rights, the tool still doesn’t let you edit in the sense that you would expect the word edit to mean. You can add your stuff to the presentation, but can’t do squat with anything else in the presentation. In some ways it makes sense, but functionally it’s a nightmare. What if your group member is a total tool and puts their about me stuff on the wrong page? What if they made an error that you catch? Why do you have to make them fix it instead of doing the sensible thing and fixing it yourself?

With all that said, people tend to like the tool once they figure it out. The problem is that many don’t get past that hurdle without help, and there’s only so much help to go around.